Solana Prediction Markets: Hidden Security Tradeoffs of Speed

A detailed look at Solana prediction markets and the hidden security tradeoffs behind high-speed execution and low-latency design.

Solana’s speed and near-instant block times make it a natural fit for prediction markets, where real-time trading and fast settlement really matter. But that performance comes with tradeoffs. The same design choices that power Solana (Proof-of-History, the Sealevel parallel runtime, leader-scheduled blocks, and its account model) also create security challenges you won’t run into on slower chains like Ethereum.

This piece focuses on those Solana-specific risks. We break down how they arise at a system level, how they can be exploited in practice, and what prediction market teams should do to defend against them before shipping to mainnet.

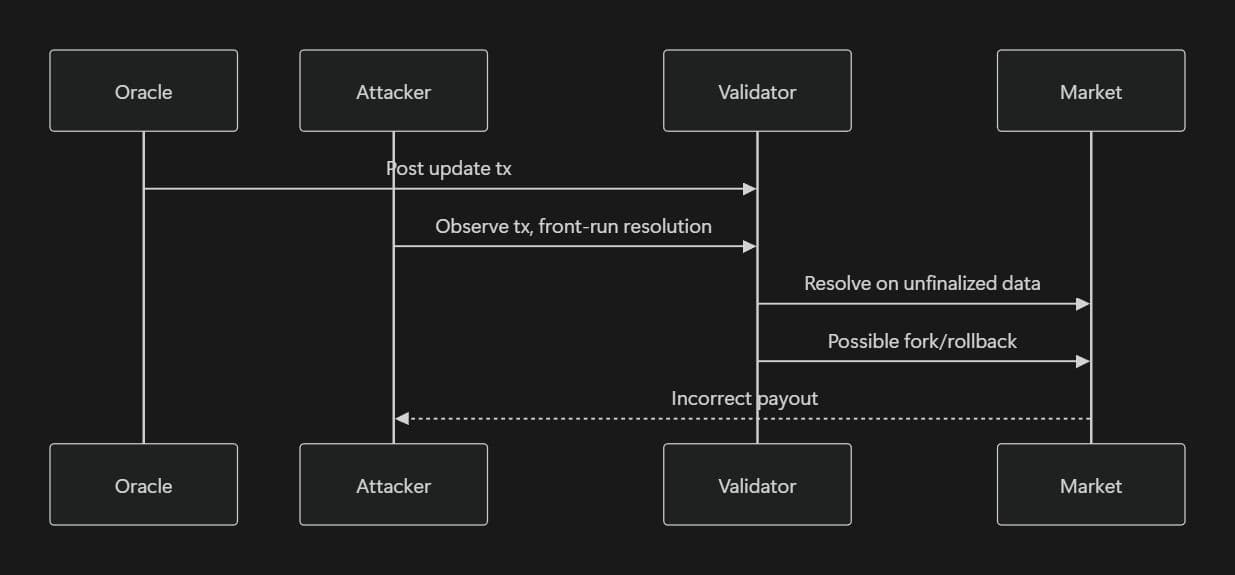

Oracle Finality vs Transaction Finality

Solana produces a slot roughly every 400 milliseconds, and a transaction can reach optimistic confirmation (the “confirmed” commitment level, once a supermajority of stake has voted on its block) shortly after it lands. But that’s not the same as true finality. A block is only “finalized” once it is rooted, which in practice means roughly 32 more slots of votes on top of it. Only then is it economically safe from reorgs. That usually takes 12–13 seconds (32 × ~400 ms), and during congestion or validator churn it can stretch past 25 seconds.

Oracles add another layer of timing complexity. Systems like Pyth, Switchboard, and Chainlink typically collect data off-chain from multiple publishers and then push a single verified update on-chain. That posting process introduces its own delay, often a few seconds, and it doesn’t neatly line up with Solana’s finality window.

When these timelines drift out of sync, a dangerous race condition appears. An attacker who observes transactions in flight to the current leader can spot an incoming oracle update. They can then immediately submit a market resolution transaction before that update is finalized. If the prediction market settles during this optimistic window, it risks resolving on information that later gets rolled back in a fork. That opens the door to incorrect payouts, double-spends, or outright loss of funds.

Vulnerable pattern (Anchor):

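```rust
// Resolves as soon as the scheduled slot passes, even if the oracle value
// being read only has optimistic (not finalized) confirmation.
if Clock::get()?.slot >= market.resolution_slot {
    // resolve immediately: dangerous in the optimistic window
}
```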

Required mitigation:

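```rust
// oracle_slot is the slot of the oracle update the market will settle on.
// Slot distance is only a proxy for confirmations: skipped slots mean fewer
// than 40 blocks may actually have been produced in that window.
require!(
    Clock::get()?.slot >= market.oracle_slot.saturating_add(40), // >32 + margin for congestion spikes
    ErrorCode::InsufficientFinality
);
```

Security researchers should actively test how their systems behave around fork and finality boundaries in local environments. One starting point is running solana-test-validator with a short epoch length (for example, --slots-per-epoch 32). Slot warping (--warp-slot, or warp_to_slot in solana-program-test) can then exercise resolution logic at and just past the finality buffer. A single local validator does not produce competing forks on its own, so exercising real reorgs requires a multi-validator local cluster.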

Beyond local testing, teams should also look at real network behavior. Analyzing historical RPC data can help model how finality delays change under load, and those insights can be used to dynamically increase safety buffers during periods of congestion or validator instability.
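One way to turn historical data into a dynamic buffer is to take a high percentile of observed finality lag and add a margin. The sketch below assumes you have already collected, off-chain via RPC, the number of slots between each oracle update and the slot at which it was first seen as finalized. The function name, the 99th-percentile choice, and the example floor of 40 slots are illustrative assumptions, not tuned values.

```rust
/// Hypothetical helper: derive a resolution delay (in slots) from observed
/// finality lag. Each sample is the number of slots between an oracle update
/// and the slot at which it was first seen at "finalized" commitment.
pub fn finality_buffer(samples: &[u64], floor: u64, margin: u64) -> u64 {
    if samples.is_empty() {
        // No history yet: fall back to the static floor.
        return floor;
    }
    let mut sorted = samples.to_vec();
    sorted.sort_unstable();
    // Nearest-rank 99th percentile: rank = ceil(0.99 * n), 1-based.
    let rank = (sorted.len() * 99 + 99) / 100;
    let p99 = sorted[rank - 1];
    // Add a margin on top of the observed tail, but never drop below the floor.
    floor.max(p99.saturating_add(margin))
}
```

A keeper could periodically write the result into a per-market delay field (hypothetical) that the on-chain finality check reads instead of a hard-coded constant.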

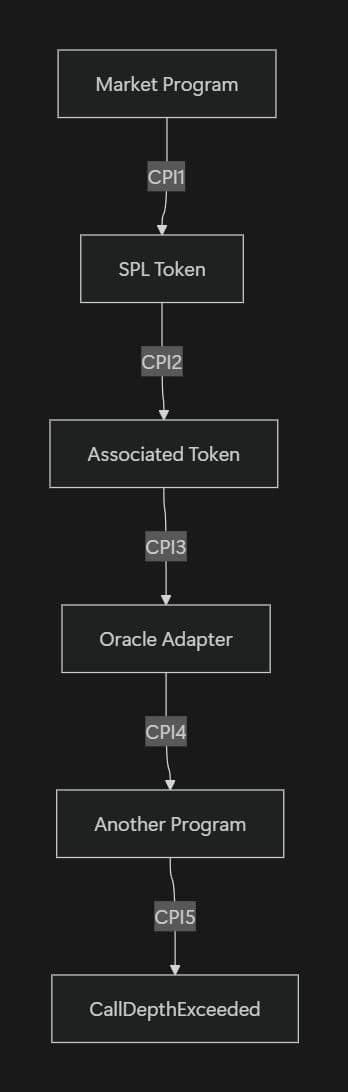

CPI Call Depth Limits

Solana puts firm limits on how deep programs can call into each other. Cross-Program Invocations are capped at four nested calls, and the total call stack, including internal function calls, can’t exceed 64 frames. These limits exist to keep the Sealevel runtime safe and predictable, but they can surprise teams building more complex applications.

Prediction markets are especially prone to hitting these ceilings. Settlement logic often needs to touch many pieces at once: resolving related markets, moving tokens for payouts, reading oracle data, checking rent sysvars, and updating multiple derived accounts. When this logic is written in a deeply nested or recursive way, it’s easy to run into call depth limits, causing transactions to fail in production even though the code looks correct on paper.

Attackers can exploit call depth limits by creating markets that depend on one another in carefully crafted ways. When the protocol tries to resolve these markets in a single flow, it can be pushed into deep or recursive execution paths that exceed Solana’s limits. The result isn’t a flashy exploit, but it can be worse: batch settlements fail, transactions revert, and user funds remain stuck until someone steps in and resolves the situation manually.

Design multi-market resolution logic to be flat and predictable. Break complex workflows into small, iterative steps and process them across multiple transactions when needed. Keep CPI depth to three or fewer in any performance-critical path, and avoid recursion altogether. Recursion is brittle under real network conditions and easy to abuse.

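```rust
use solana_program::program::invoke;

// settle_instruction is a hypothetical helper that builds one market's settle Instruction.
for market in markets.iter() {
    // Each CPI returns before the next starts, so invoke depth stays at one level.
    invoke(&settle_instruction(*market), &accounts)?;
}
```

Researchers should audit CPI traces in local validator tests. Program logs record each invocation as "Program <id> invoke [N]", where N is the current invocation stack height, so unexpected depth shows up directly. Simulating large-scale settlements with solana-program-test helps identify hidden depth explosions.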
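To spread settlement across transactions instead of nesting it, one common approach is a persisted cursor. Each transaction settles a bounded slice of pending markets and records where it stopped. The sketch below models only that bookkeeping in plain Rust. SettlementQueue, MAX_PER_TX, and the settle callback are hypothetical; in a real program the cursor would live in an account and each settle step would be a single shallow CPI.

```rust
/// Hypothetical per-transaction budget; tune it against measured compute usage.
pub const MAX_PER_TX: usize = 8;

/// Pending markets plus a cursor that would be persisted in an account.
pub struct SettlementQueue {
    pub markets: Vec<u64>, // market ids, already in dependency order
    pub cursor: usize,     // index of the next market to settle
}

impl SettlementQueue {
    /// Settle at most MAX_PER_TX markets with a flat loop (no recursion).
    /// Returns how many markets were settled in this call.
    pub fn settle_batch<F>(&mut self, mut settle_one: F) -> Result<usize, String>
    where
        F: FnMut(u64) -> Result<(), String>,
    {
        let start = self.cursor.min(self.markets.len());
        let end = (start + MAX_PER_TX).min(self.markets.len());
        for &id in &self.markets[start..end] {
            // On failure, return before advancing the cursor. On-chain the whole
            // transaction would revert, so the same batch can simply be retried.
            settle_one(id)?;
        }
        self.cursor = end;
        Ok(end - start)
    }

    pub fn is_done(&self) -> bool {
        self.cursor >= self.markets.len()
    }
}
```

Because each call does a bounded amount of work, a crafted web of interdependent markets can make settlement take more transactions, but it cannot push any single transaction past the depth or compute limits.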

Account Rent and State Pruning Risks

On Solana, accounts need to stay rent exempt: they must hold enough lamports to cover their data size. Historically, accounts below that threshold were charged rent every epoch (roughly two days). Once the balance hit zero, the account was garbage collected and its data was gone for good. Current runtimes instead refuse to let an account fall below its rent-exempt minimum, so a transaction that would grow or drain an account past that line fails outright.

This is especially risky for long-running prediction markets, like multi-month geopolitical or climate forecasts. As bets and metadata accumulate, account sizes grow, which raises the lamports required to keep them rent exempt. Without careful planning, a market can bloat its own state until the reallocations needed to record new bets or settlement data start failing, stalling resolution. Under the legacy rent model, rent could instead drain the account and wipe out critical, unresolved data. Either way, the market’s integrity breaks.

An attacker doesn’t need a sophisticated exploit here. By spamming many small, low-value bets or interactions, they can artificially inflate account size. That pushes the rent-exempt minimum up faster than the market’s balance grows, particularly in low-liquidity markets where buffers are thin.

Mitigation:

```rust
// account_data_len and buffer are both in bytes; check against the padded size.
let rent = Rent::get()?;
let required = rent.minimum_balance(account_data_len + buffer);
require!(account.lamports() >= required, ErrorCode::InsufficientRent);
```

Run automated off-chain monitoring (for example, cron jobs or keeper bots) that tops up balances when size growth is detected. The top-up can be a direct transfer or a program instruction that CPIs into the System Program. Always over-provision a conservative buffer (for example, 20–50%) and periodically reclaim rent from closed markets using close instructions.
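To reason about top-up amounts off-chain, the sketch below reimplements the Rent::minimum_balance formula with Solana’s default parameters. Those defaults are 3,480 lamports per byte-year, a 2-year exemption threshold, and 128 bytes of per-account storage overhead. Treat these constants as assumptions and read the live values from the Rent sysvar in production. The helper names and the percentage buffer are hypothetical.

```rust
/// Default rent parameters (assumed; read the Rent sysvar in production).
pub const LAMPORTS_PER_BYTE_YEAR: u64 = 3_480;
pub const EXEMPTION_YEARS: u64 = 2;
pub const ACCOUNT_STORAGE_OVERHEAD: u64 = 128;

/// Mirrors Rent::minimum_balance for the default parameters
/// (integer math is exact here because the threshold is a whole 2 years).
pub fn minimum_balance(data_len: u64) -> u64 {
    (ACCOUNT_STORAGE_OVERHEAD + data_len) * LAMPORTS_PER_BYTE_YEAR * EXEMPTION_YEARS
}

/// Lamports a keeper would need to add so the account stays rent exempt at
/// projected_len bytes plus a percentage buffer (e.g. 25 for 25%).
pub fn top_up_needed(current_lamports: u64, projected_len: u64, buffer_pct: u64) -> u64 {
    let padded_len = projected_len + projected_len * buffer_pct / 100;
    minimum_balance(padded_len).saturating_sub(current_lamports)
}
```

Under these defaults, a 165-byte account needs 2,039,280 lamports. Keeping a 1,000-byte account funded with a 25% buffer (1,250 bytes) requires an extra 1,740,000 lamports.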

Parallel Execution and Optimistic Concurrency Control

Sealevel is designed to run many transactions at the same time, as long as they don’t write to the same accounts (read-only access can be shared). A transaction that needs a write lock on an account already locked by another transaction can’t run alongside it. The runtime serializes them, and the lock conflict surfaces as an AccountInUse error. A transaction that writes to an account it never declared as writable fails outright.

In high-volume prediction markets, this shows up fast. Shared PDAs, like a single outcome or market state account, become hot under load. Instead of benefiting from parallel execution, transactions pile up and get forced through one by one. That serialization doesn’t just slow things down; it creates predictable timing windows that MEV actors can exploit.

Be explicit and deliberate about account access. Always declare mutable accounts correctly and avoid funneling traffic through a single PDA. Consider versioned PDAs (seeds that include a version or nonce alongside the bump) or sharding state across per-user or per-outcome accounts. Before shipping, stress-test the system under simulated concurrency with multi-threaded tooling to understand where conflicts actually occur.
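As a rough illustration of sharding, the sketch below maps each user to one of N outcome shards and measures worst-case contention on any single writable account. The mapping function, the seed layout, and the shard count are hypothetical. On-chain, the seeds would be passed as slices to Pubkey::find_program_address to derive each shard’s PDA.

```rust
/// Map a user's 32-byte key to a shard index (hypothetical scheme).
pub fn shard_for(user: &[u8; 32], num_shards: u8) -> u8 {
    assert!(num_shards > 0, "need at least one shard");
    // Keys are effectively random bytes, so the first two spread honest users evenly.
    let x = u16::from_le_bytes([user[0], user[1]]);
    (x % num_shards as u16) as u8
}

/// Seeds for a sharded outcome account (layout is an assumption).
pub fn shard_seeds(market: &[u8; 32], outcome: u8, shard: u8) -> Vec<Vec<u8>> {
    vec![b"outcome".to_vec(), market.to_vec(), vec![outcome], vec![shard]]
}

/// Worst-case number of transactions contending for one writable account.
pub fn max_contention(users: &[[u8; 32]], num_shards: u8) -> usize {
    let mut counts = vec![0usize; num_shards as usize];
    for u in users {
        counts[shard_for(u, num_shards) as usize] += 1;
    }
    counts.into_iter().max().unwrap_or(0)
}
```

Sharding shifts cost to settlement time, when an aggregation step must read every shard. It also only spreads honest load: an attacker can grind keys that map to a single shard, so the shard count is a throughput knob, not a security boundary.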

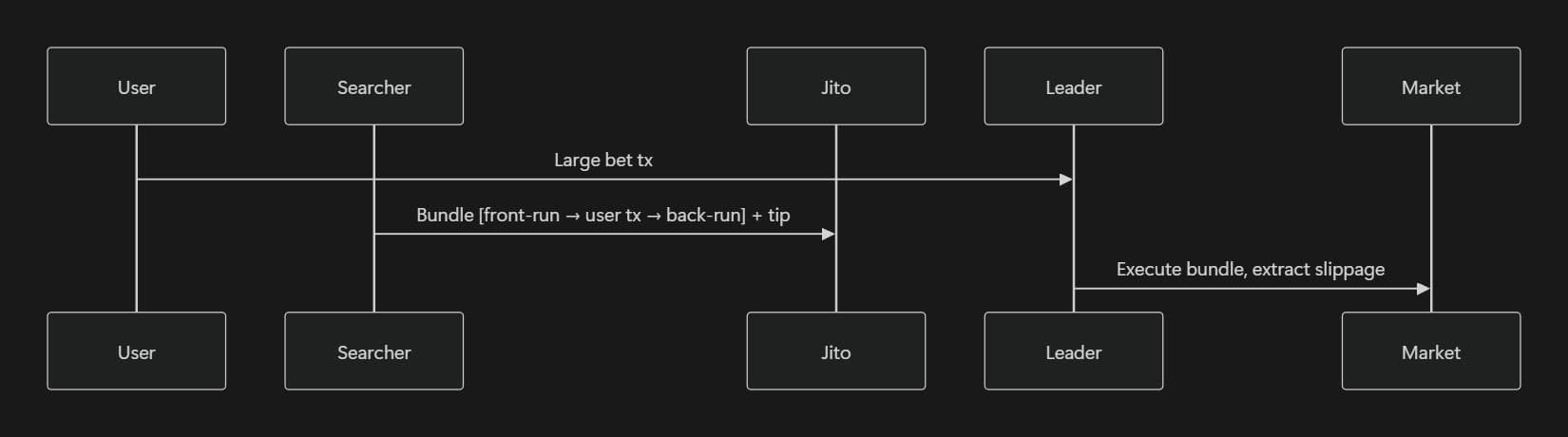

MEV and Ordering Attacks

Solana’s transaction flow gives block leaders a lot of influence over ordering. When you combine leader scheduling with priority fees and Jito bundles, it becomes possible to deliberately reorder transactions inside a block.

In prediction markets, this creates an obvious target: large bets that materially move the odds. Searchers can spot these trades and wrap them with their own transactions, one just before and one just after, to capture the slippage the bet creates. The user still gets filled, but at a worse price, while the attacker quietly extracts value in the middle.
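To make the value at stake concrete, here is a toy constant-product (x * y = k) pool of YES shares against USDC, in integer units with no fees. Real prediction-market AMMs (LMSR and CPMM variants) differ in detail. Treat this as an illustration of the sandwich mechanism, not a model of any specific protocol.

```rust
/// Toy constant-product pool (x * y = k) with no fees: YES shares vs USDC.
#[derive(Clone, Copy, Debug)]
pub struct Pool {
    pub yes: u128,
    pub usdc: u128,
}

fn ceil_div(a: u128, b: u128) -> u128 {
    (a + b - 1) / b
}

impl Pool {
    /// Spend usdc_in to buy YES shares; returns the shares received.
    pub fn buy_yes(&mut self, usdc_in: u128) -> u128 {
        let k = self.yes * self.usdc;
        let new_usdc = self.usdc + usdc_in;
        let new_yes = ceil_div(k, new_usdc); // round in the pool's favor
        let out = self.yes - new_yes;
        self.yes = new_yes;
        self.usdc = new_usdc;
        out
    }

    /// Sell yes_in shares back to the pool; returns the USDC received.
    pub fn sell_yes(&mut self, yes_in: u128) -> u128 {
        let k = self.yes * self.usdc;
        let new_yes = self.yes + yes_in;
        let new_usdc = ceil_div(k, new_yes);
        let out = self.usdc - new_usdc;
        self.yes = new_yes;
        self.usdc = new_usdc;
        out
    }
}

/// Returns (victim shares with no attack, victim shares when sandwiched,
/// attacker profit in USDC).
pub fn sandwich(pool: Pool, victim_in: u128, attacker_in: u128) -> (u128, u128, i128) {
    let mut clean = pool;
    let fair = clean.buy_yes(victim_in);

    let mut p = pool;
    let front = p.buy_yes(attacker_in); // front-run: attacker buys first
    let victim = p.buy_yes(victim_in); // victim fills at a worse price
    let back = p.sell_yes(front); // back-run: attacker sells into the move
    (fair, victim, back as i128 - attacker_in as i128)
}
```

With a 1,000,000 / 1,000,000 pool, a 100,000 USDC bet receives 90,909 YES shares on its own. When the same bet is wrapped by a 100,000 USDC front-run, it receives noticeably fewer, and the attacker exits with a profit.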

There’s no single fix for transaction reordering, so protocols need to layer their defenses. Techniques like commit–reveal flows or VRF-based randomized ordering make it harder for searchers to predict or target individual trades. Using market maker designs that are less sensitive to large, single orders can also reduce the value available to attackers. On the infrastructure side, integrating Jito’s anti-sandwich bundle features or routing sensitive transactions through private submission paths can significantly narrow the attack surface.
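A commit-reveal flow hides the order until it can no longer be front-run. The user first posts a hash of the order plus a secret salt, then reveals the order in a later slot window, and the program checks the reveal against the stored commitment. The sketch below models that check in plain Rust. Order, Commitment, the slot windows, and the byte layout are assumptions. The hash function is injected so that an on-chain version can wrap a cryptographic hash such as solana_program::hash::hashv.

```rust
/// Hash function supplied by the caller; on-chain it must be cryptographic.
pub type HashFn = fn(&[&[u8]]) -> [u8; 32];

/// The order a user wants to hide until the reveal phase (layout is hypothetical).
pub struct Order {
    pub outcome: u8,
    pub amount: u64,
    pub user: [u8; 32],
    pub salt: [u8; 32], // random secret chosen by the user
}

/// Stored at commit time; the order itself stays off-chain until reveal.
pub struct Commitment {
    pub digest: [u8; 32],
    pub commit_slot: u64,
}

#[derive(Debug, PartialEq)]
pub enum RevealError {
    TooEarly,
    Expired,
    Mismatch,
}

pub fn commit_digest(h: HashFn, o: &Order) -> [u8; 32] {
    let outcome = [o.outcome];
    let amount = o.amount.to_le_bytes();
    h(&[&outcome[..], &amount[..], &o.user[..], &o.salt[..]])
}

/// Accept a reveal only within [commit_slot + min_delay, commit_slot + max_delay]
/// and only if the revealed order hashes to the stored digest.
pub fn verify_reveal(
    h: HashFn,
    c: &Commitment,
    o: &Order,
    now_slot: u64,
    min_delay: u64,
    max_delay: u64,
) -> Result<(), RevealError> {
    if now_slot < c.commit_slot.saturating_add(min_delay) {
        return Err(RevealError::TooEarly);
    }
    if now_slot > c.commit_slot.saturating_add(max_delay) {
        return Err(RevealError::Expired);
    }
    if commit_digest(h, o) != c.digest {
        return Err(RevealError::Mismatch);
    }
    Ok(())
}
```

One design consequence worth noting: if revealed orders execute at whatever price prevails at reveal time, the reveal transaction itself becomes the new target. Pairing commit-reveal with a price fixed at commit time, or with batch execution, is one way to close that gap.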

To validate these defenses, researchers shouldn’t rely on theory alone. Actively simulate MEV extraction using tools like solana-bench-tps and Jito clients, then inspect real block data via getBlock RPC calls to see how bundles are included and reordered under load.

Building a Prediction Market on Solana? Don’t Ship Blind

Reorg windows, oracle race conditions and parallel execution bugs don’t show up in basic tests. Our audits simulate real Solana network behavior to catch them early.

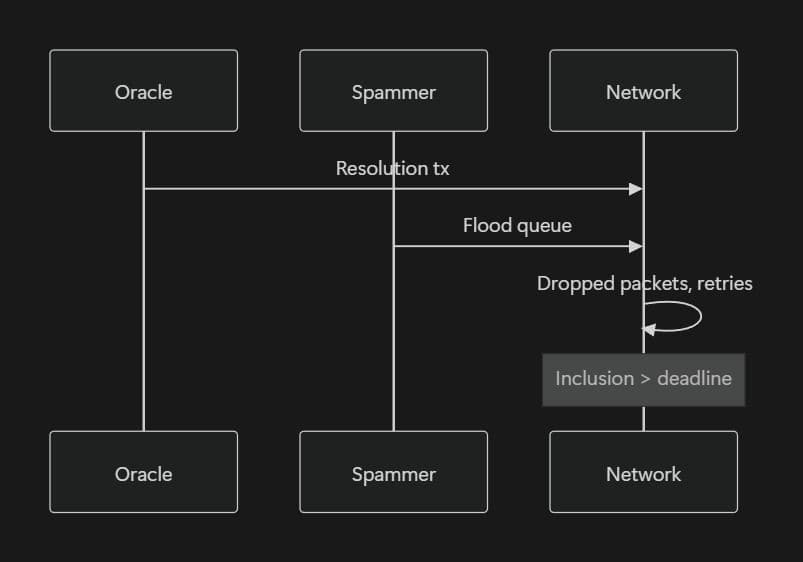

Network Congestion and Inclusion Failures

When the network gets congested, transactions don’t just get slower; they become unreliable. QUIC packet loss and overloaded leader queues can turn what should be sub-second inclusion into delays lasting minutes, or cause transactions to be dropped entirely.

For prediction markets that depend on tight resolution deadlines, this is dangerous. An attacker doesn’t need to manipulate prices or data; they can simply flood the network and prevent oracle updates from landing in time. If the deadline passes without the update, the market can be forced into an incorrect or stalled resolution. This is a classic griefing attack, driven by congestion rather than clever contract logic.

To stay reliable under congestion, protocols need to adapt in real time. Dynamically raising priority fees with mechanisms like ComputeBudgetInstruction::set_compute_unit_price helps critical transactions compete when leader queues are full. Off-chain monitoring adds another safety layer: by watching network health signals, it can automatically extend resolution windows when conditions deteriorate instead of letting them fail hard.
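A simple escalation policy might start from recent network prices and double on each retry, up to a budget cap. The sketch assumes prices are in micro-lamports per compute unit (the unit set_compute_unit_price takes), sampled for example via the getRecentPrioritizationFees RPC method. The median starting point, the doubling factor, and the cap are hypothetical choices.

```rust
/// Hypothetical escalation policy for critical transactions (oracle updates,
/// resolutions). recent holds micro-lamport-per-CU prices from recent blocks.
pub fn pick_cu_price(recent: &[u64], attempt: u32, cap: u64) -> u64 {
    let mut sorted = recent.to_vec();
    sorted.sort_unstable();
    // Start at the (upper) median of recent prices, at least 1 micro-lamport.
    let base = sorted.get(sorted.len() / 2).copied().unwrap_or(0).max(1);
    // Double on every retry (saturating), then clamp to the budget cap.
    let factor = 1u64.checked_shl(attempt).unwrap_or(u64::MAX);
    base.saturating_mul(factor).min(cap)
}

/// Priority fee in lamports for a given CU price and CU limit, rounded up:
/// ceil(cu_price_micro * cu_limit / 1_000_000).
pub fn priority_fee_lamports(cu_price_micro: u64, cu_limit: u32) -> u64 {
    let micro = cu_price_micro as u128 * cu_limit as u128;
    ((micro + 999_999) / 1_000_000) as u64
}
```

In a client, the chosen price would be attached with set_compute_unit_price, alongside set_compute_unit_limit so the fee estimate matches the limit actually requested.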

Relying on a single oracle or a tight deadline is brittle under stress. Using multiple oracles with voting and clearly defined fallback deadlines gives the system more room to recover when updates are delayed.
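The quorum-and-fallback idea can be sketched as a pure decision function. It assumes each report has already been authenticated, for example by checking the oracle account’s owner or verifying signatures on-chain. It uses a median so one bad source cannot move the result on its own. The Report layout, staleness window, and quorum size are hypothetical.

```rust
/// One authenticated oracle report (hypothetical layout).
#[derive(Clone, Copy, Debug)]
pub struct Report {
    pub price: i64, // fixed-point price
    pub slot: u64,  // slot the report was published at
}

#[derive(Debug, PartialEq)]
pub enum Resolution {
    Resolved(i64), // median of fresh reports
    Wait,          // not enough fresh reports, deadline not reached
    Fallback,      // deadline passed without quorum: take the fallback path
}

/// Resolve only when at least quorum reports are no older than max_age slots.
pub fn resolve_with_quorum(
    reports: &[Report],
    now: u64,
    max_age: u64,
    quorum: usize,
    fallback_deadline: u64,
) -> Resolution {
    let mut fresh: Vec<i64> = reports
        .iter()
        .filter(|r| r.slot <= now && now - r.slot <= max_age)
        .map(|r| r.price)
        .collect();
    if !fresh.is_empty() && fresh.len() >= quorum {
        fresh.sort_unstable();
        // Lower median for even counts; a single outlier cannot drag it far.
        return Resolution::Resolved(fresh[(fresh.len() - 1) / 2]);
    }
    if now > fallback_deadline {
        Resolution::Fallback
    } else {
        Resolution::Wait
    }
}
```

Returning Wait instead of failing gives the off-chain monitor room to extend the window during congestion. Fallback makes the "what if no quorum ever arrives" path explicit rather than leaving the market stuck.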

Finally, don’t assume this will work just because it looks good on paper. Stress-test under simulated congestion and review validator-level QUIC logs to understand how transactions behave when the network is actually under pressure.

Solana-Native Security Checklist for Prediction Markets

Building prediction markets on Solana means leaning into its strengths without getting blindsided by its edge cases. The checklist below captures the most common failure modes teams run into and how to avoid them.

- Delay market resolution past true finality. Only resolve markets after the oracle update slot plus a healthy buffer (e.g. ≥40 slots) to account for economic finality and congestion-related delays.

- Keep execution shallow and predictable. Limit CPI depth to three or fewer calls. Use iterative settlement loops instead of recursion to avoid stack overflows and hard-to-debug runtime failures.

- Stay safely rent-exempt at all times. Continuously calculate required rent as account state grows, add buffers, and automatically top up long-lived accounts before their growth outpaces their lamport balance.

- Be explicit about account access. Declare all read/write accounts up front, and use versioned PDAs to reduce write contention and parallel execution conflicts.

- Design for MEV resistance, not hope. Large trades will be targeted. Use commit–reveal flows, VRFs, CFMM-style pricing, or private RPC paths to reduce extractable value.

- Don’t trust a single oracle. Require quorum-based updates from multiple sources, with on-chain signature verification to prevent single-point failures.

- Adapt to congestion instead of failing. Increase priority fees dynamically and monitor RPC health so the protocol can extend deadlines or adjust behavior when the network degrades.

- Test like the network is hostile. Simulate forks, congestion, and high account-conflict scenarios using validator warping and spam tooling, not just happy-path unit tests.

- Audit compute usage under worst-case paths. Settlement logic that barely fits under normal conditions can be weaponized into a denial-of-service during peak load.

- Lock down upgradeability. Use timelocked governance, minimize upgrade surfaces, and keep core logic immutable wherever possible.
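The last checklist item, timelocked governance, can be sketched as a queue-then-execute rule. An admin action (an upgrade or parameter change, identified by a hash of its payload) only becomes executable after a delay, and only within a grace window. The struct names and slot-based delays are hypothetical. A real program might use Clock::unix_timestamp instead, since slot times vary; at ~400 ms per slot, 216,000 slots is roughly one day.

```rust
#[derive(Debug, PartialEq)]
pub enum TimelockError {
    NotQueued,
    TooEarly,
    Expired,
}

pub struct QueuedAction {
    pub payload_hash: [u8; 32], // hash of the upgrade or config change
    pub eta_slot: u64,          // earliest slot it may execute
}

/// Hypothetical single-slot timelock for admin actions.
pub struct Timelock {
    pub delay_slots: u64,
    pub grace_slots: u64,
    pub queued: Option<QueuedAction>,
}

impl Timelock {
    pub fn queue(&mut self, payload_hash: [u8; 32], now: u64) {
        let eta_slot = now.saturating_add(self.delay_slots);
        self.queued = Some(QueuedAction { payload_hash, eta_slot });
    }

    /// Execute only the exact queued payload, only inside [eta, eta + grace].
    pub fn execute(&mut self, payload_hash: [u8; 32], now: u64) -> Result<(), TimelockError> {
        let q = self
            .queued
            .as_ref()
            .filter(|q| q.payload_hash == payload_hash)
            .ok_or(TimelockError::NotQueued)?;
        if now < q.eta_slot {
            return Err(TimelockError::TooEarly);
        }
        if now > q.eta_slot.saturating_add(self.grace_slots) {
            return Err(TimelockError::Expired);
        }
        self.queued = None; // consume so the action cannot be replayed
        Ok(())
    }
}
```

The delay gives users time to see a pending change and exit before it lands, which is the point of timelocking upgrade authority.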

Conclusion

Solana’s performance advantages are real, but they come with sharp edges. Teams that treat Solana as Ethereum, just faster, tend to ship systems that fail under real network conditions. The risks outlined here aren’t hypothetical; many have already caused losses in production DeFi protocols.

Building secure prediction markets on Solana requires conservative assumptions, aggressive testing, and designs that remain correct during congestion, forks, and adversarial conditions. The most reliable teams take this a step further by having independent experts stress-test their assumptions early, so they can secure their Solana programs before these edge cases turn into costly incidents.
